Wheat is the main arable grain crop in the UK. It is grown on around 2,000,000 hectares, yet pests are estimated to cost 5-20% of its average annual yield. In 2020, AHDB reported a five-year average UK winter wheat yield of 8.4 t/ha. At an average price of about £140 per tonne, the crop is worth about £2.3 billion. Pesticides are widely used to protect crops against pest damage. However, they are often applied on an insurance basis rather than a prescriptive one: routinely, rather than in response to observed pest pressure. As sustainable soil health management becomes more important, demand is growing for cost-effective wheat pest management solutions. These should help farmers grow more sustainably, with fewer chemical inputs and reduced soil erosion.
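As a quick consistency check on the figures above, the sketch below multiplies area, yield and price, then applies the 5-20% loss range. It assumes the loss percentages can be applied directly to the crop's market value. This is a simplification, since they are quoted as yield losses. The function names are illustrative only.

```python
# Back-of-envelope check of the UK wheat figures quoted above.
AREA_HA = 2_000_000      # approximate UK wheat area, hectares
YIELD_T_PER_HA = 8.4     # five-year average winter wheat yield, t/ha (AHDB, 2020)
PRICE_GBP_PER_T = 140    # approximate average price, GBP per tonne


def crop_value_gbp(area_ha: float, yield_t_per_ha: float, price_gbp_per_t: float) -> float:
    """Total production (tonnes) multiplied by price (GBP per tonne)."""
    return area_ha * yield_t_per_ha * price_gbp_per_t


def pest_loss_range_gbp(value_gbp: float, low: float = 0.05, high: float = 0.20):
    """Apply the quoted 5-20% loss range to the crop value (simplifying assumption)."""
    return value_gbp * low, value_gbp * high


value = crop_value_gbp(AREA_HA, YIELD_T_PER_HA, PRICE_GBP_PER_T)
low, high = pest_loss_range_gbp(value)
print(f"Crop value: GBP {value / 1e9:.2f} bn")
print(f"Pest losses: GBP {low / 1e6:.0f}m to GBP {high / 1e6:.0f}m")
```

The product comes to roughly £2.35 billion, consistent with the "about £2.3 billion" quoted.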

Equipment for wheat pest monitoring: Existing products on the global market for automatic monitoring and prediction of wheat pests are mostly stationary monitoring facilities. These rely on two types of trap technology: light lures and sex-pheromone lures. First, a number of stationary traps are deployed at different locations in a wheat field. Next, the number and species of pests caught by the traps each day are counted and identified manually. Finally, pest population trends are estimated and predicted by analysing these longitudinal data.
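The paragraph above does not say how existing products turn daily trap counts into a trend. As one possible illustration, the hypothetical sketch below does three things. It smooths the counts with a trailing moving average, fits a least-squares straight line against day number, and extrapolates that line. Real systems may use quite different models, for example weather-driven or seasonal ones.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def moving_average(counts: Sequence[float], window: int = 3) -> List[float]:
    """Trailing moving average of daily trap counts, to smooth day-to-day noise."""
    if window < 1:
        raise ValueError("window must be >= 1")
    return [mean(counts[i - window + 1 : i + 1]) for i in range(window - 1, len(counts))]


def linear_trend(counts: Sequence[float]) -> Tuple[float, float]:
    """Least-squares (slope, intercept) of count against day index 0, 1, 2, ..."""
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two days of counts")
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(counts)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, counts))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def forecast(counts: Sequence[float], days_ahead: int) -> float:
    """Extrapolate the fitted line `days_ahead` days past the last observed day."""
    slope, intercept = linear_trend(counts)
    return intercept + slope * (len(counts) - 1 + days_ahead)
```

For example, toy counts of 2, 4, 6 and 8 on four consecutive days give a slope of 2 insects per day and a forecast of 10 for the following day. A straight line is the simplest choice. It will not capture the boom-and-bust shape of real pest populations.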

AI-based techniques for automatic pest detection: Building on the wheat pest monitoring devices described above, there have been several efforts to apply artificial intelligence (AI) techniques to automatic pest detection and monitoring systems. These mainly include:

- Pest trap image analysis approaches: earlier image-based work focused on plant leaf characteristics to find signs of damage. Analysing images of trapped pests, by contrast, provides an opportunity to recognize infestation problems before signs of leaf damage arise.

- Mobile pest image analysis approaches have focused on pheromone traps but require special hardware to capture images. Our system, by contrast, can work with images taken by ordinary smartphone cameras.

Technically, both approaches rely on conventional object detection pipelines that combine handcrafted feature extraction with model training. Typical techniques include Principal Component Analysis (PCA), RGB and multispectral analysis, support vector machines (SVMs) and k-nearest neighbours (k-NN). These approaches have achieved some success, but their results depend heavily on the quality of the handcrafted features. They are rarely usable for practical wheat pest monitoring on large-scale, multi-class insect datasets. Selecting and designing features is laborious, and the resulting features cannot represent all aspects of insect appearance.
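A minimal sketch makes the handcrafted-feature pipeline concrete. A few hand-chosen colour features are computed for each image crop, and a k-nearest-neighbours vote assigns a class. The feature choice, the dark-pixel threshold and any class labels are hypothetical. A real pipeline would typically add steps such as PCA or an SVM, which are omitted here for brevity. The sketch also shows the weakness described above: the classifier can only be as good as the features someone thought to design.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]       # (R, G, B), each 0-255
Features = Tuple[float, ...]


def colour_features(pixels: Sequence[Pixel]) -> Features:
    """Handcrafted features: normalised mean R, G, B and the fraction of dark pixels.

    The dark-pixel threshold (mean brightness < 64) is an arbitrary illustrative choice.
    """
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    dark = sum(1 for r, g, b in pixels if (r + g + b) / 3 < 64) / n
    return (mean_r / 255, mean_g / 255, mean_b / 255, dark)


def knn_predict(train: List[Tuple[Features, str]], query: Features, k: int = 3) -> str:
    """Label `query` by majority vote among its k nearest training examples (Euclidean)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

Usage: build `train` as `(colour_features(crop_pixels), label)` pairs from labelled image crops, then call `knn_predict(train, colour_features(new_crop))`.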

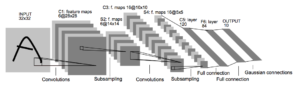

Another promising recent development in this field is deep learning, including convolutional neural networks (CNNs) and region-based CNNs (R-CNNs). These models have shown a superior ability to learn features that are invariant across multiple object categories from large amounts of training data.
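One source of this invariance can be shown in a few lines. A convolution responds to a pattern wherever it appears, and global max pooling keeps the strongest response while discarding its position. The toy sketch below is pure Python with a single hand-set kernel; a trained CNN learns many kernels from data. It shows that a small "blob" produces the same pooled response in two different positions.

```python
from typing import List

Grid = List[List[float]]


def conv2d_valid(image: Grid, kernel: Grid) -> Grid:
    """Stride-1, unpadded 2-D cross-correlation, the operation a CNN layer computes."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(grid: Grid) -> Grid:
    """Element-wise max(0, x) non-linearity."""
    return [[max(0.0, x) for x in row] for row in grid]


def global_max_pool(grid: Grid) -> float:
    """Keep only the strongest response in the map, discarding where it occurred."""
    return max(max(row) for row in grid)


def image_with_patch(size: int, patch: Grid, top: int, left: int) -> Grid:
    """Blank size x size image with `patch` pasted at (top, left)."""
    img = [[0.0] * size for _ in range(size)]
    for u, row in enumerate(patch):
        for v, value in enumerate(row):
            img[top + u][left + v] = value
    return img


# Hand-set 2x2 "blob" detector (illustrative; a trained CNN learns its kernels).
KERNEL = [[1.0, 1.0], [1.0, 1.0]]
PATCH = [[1.0, 1.0], [1.0, 1.0]]

for top, left in [(0, 0), (3, 3)]:
    img = image_with_patch(6, PATCH, top, left)
    response = global_max_pool(relu(conv2d_valid(img, KERNEL)))
    print(f"patch at ({top}, {left}): pooled response = {response}")
```

Both positions yield the same pooled response, so a classifier reading only the pooled value cannot tell where the blob was.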

Recent work applying object detection to pest recognition has used one-stage or two-stage methods, such as LeNet, SSE and PestNet. Notably, PestNet was developed by our team at Sheffield. It is a two-stage, CNN-based pest monitoring approach that uses hybrid global and local activated features on a large-scale, multi-class pest dataset covering 16 types of wheat pest.

PestNet is among the best-performing deep-learning-based wheat pest recognition models worldwide. It received the Best Paper Award at the 19th IEEE International Conference on Industrial Informatics, ranked first of 428 papers.
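Both one-stage and two-stage detectors typically produce many overlapping candidate boxes around each object. These are commonly pruned with greedy non-maximum suppression (NMS) based on intersection-over-union (IoU). The sketch below is a generic, minimal version of that step, not a description of PestNet's internals. The (x1, y1, x2, y2) box format and the 0.5 threshold are assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it above
    the threshold, and repeat. Returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep
```

For example, two heavily overlapping detections of the same insect (IoU about 0.68) collapse to the higher-scoring one, while a distant third box is kept. Lowering the threshold suppresses more aggressively, which can merge insects that sit close together on a trap.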